INTRODUCTION

Usually, we will be loading the data into hive table from HDFS file system while creating the table.

This post will help to insert values into hive table directly.

ARRAY COLUMN

Create Table Query

DROP TABLE IF EXISTS student;

CREATE TABLE student(id STRING, name STRING, class STRING, subjects ARRAY<STRING>);

|

The latest hive version will not support adding the values directly using below query.

INSERT INTO student (id,name,class,subjects) VALUES ('100','Ranjith','Mathematics',ARRAY('Mathematics','Biology','Physiscs','Chemistry')

|

But we can use STACK function to insert here.

INSERT INTO TABLE student

SELECT STACK(3,

'100','Ranjith','Mathematics',ARRAY('Mathematics','Biology','Physiscs','Chemistry'),

'200','Sekar','Computer Science',ARRAY('Mathematics','Computer Science','Physiscs','Chemistry'),

'300','Bala','Commerce',ARRAY('Political Science','Accounts','Commerce')

)

FROM empinfo

LIMIT 3;

|

Result

MAP COLUMN

Create Table Query

DROP TABLE IF EXISTS stud_mark1;

CREATE TABLE stud_mark1(id STRING, name STRING, class STRING, mark MAP<STRING,INT>);

|

Insert query.

INSERT INTO TABLE stud_mark1

SELECT STACK(2,

'100','Ranjith','Mathematics',map("Mathematics",78,"Biology",88,"Physics",89,"Chemistry",90),

'200','Sekar','Science',map("Botany",71,"Zoolagy",98,"Physics",79,"Chemistry",89)

)

FROM empinfo

LIMIT 2;

|

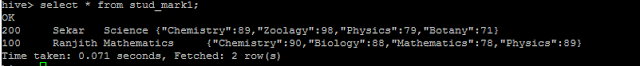

Result